Generalized Bhattacharyya and Chernoff upper bounds on Bayes error using quasi-arithmetic means

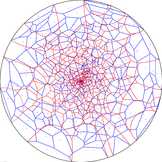

(vector graphics HVD.KP.pdf), Hyperbolic Voronoi diagrams (upper space, etc.)

Some R tutorial/code for computing the Jeffreys centroid (symmetric Kullback-Leibler divergence, SKL).

This web site is currently heading for a renewal. Stay tuned...

Computational Information Geometry

Computational information geometry deals with the study and design of efficient algorithms in information spaces using the language of geometry (such as invariance, distance, projection, ball, etc.). Historically, the field was pioneered in 1945 by C.R. Rao, who proposed to use the Fisher information metric as the Riemannian metric. This seminal work gave birth to the geometrization of statistics (e.g., statistical curvature and second-order efficiency). In statistics, invariance (by non-singular 1-to-1 reparametrization and sufficient statistics) yields the class of f-divergences, including the celebrated Kullback-Leibler divergence. The differential geometry of f-divergences can be analyzed using dual alpha-connections. Common algorithms in machine learning (such as clustering, expectation-maximization, statistical estimation, regression, independent component analysis, boosting, etc.) can be revisited and further explored using those concepts. Nowadays, the framework of computational information geometry opens up novel horizons in music, multimedia, radar, and finance/economy.

Participate in the Léon Brillouin seminar on information geometry sciences!
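To make the f-divergence family from the overview concrete, here is a minimal Python sketch for discrete distributions with strictly positive entries. It assumes the convention I_f(p:q) = sum_i q_i f(p_i/q_i), under which the generator f(u) = u log u recovers the Kullback-Leibler divergence. The function and variable names are illustrative choices, not part of any library referenced on this page.

```python
import math

def f_divergence(p, q, f):
    """Csiszar f-divergence sum_i q_i * f(p_i / q_i).

    Assumes p and q are discrete distributions with strictly positive entries.
    """
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))

def kl_generator(u):
    # f(u) = u log u yields the Kullback-Leibler divergence KL(p : q).
    return u * math.log(u)

def hellinger_generator(u):
    # f(u) = (sqrt(u) - 1)^2 yields sum_i (sqrt(p_i) - sqrt(q_i))^2.
    return (math.sqrt(u) - 1.0) ** 2

def kl_divergence(p, q):
    """Direct formula for KL(p : q), used here only as a cross-check."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Two hypothetical distributions on three outcomes.
p = [0.5, 0.3, 0.2]
q = [0.2, 0.5, 0.3]

kl_via_f = f_divergence(p, q, kl_generator)
kl_direct = kl_divergence(p, q)
hellinger_via_f = f_divergence(p, q, hellinger_generator)
```

Swapping the generator is the only change needed to move between members of the family, which is the sense in which generic algorithms can be written once for a whole class of divergences.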

Subscribe to the infogeo mailing-list for announcements (conferences, libraries, positions, etc.)

What is new?

*** To open in August/September 2011 ***

blog or twitter threads: FrnkNlsn

Consider taking the quizzes: quiz 1, quiz 2, quiz 3, quiz 4

private area

The field of computational information geometry (discrete information geometry) is interested in exploring the following domains:

- Generic algorithms: Design meta-algorithms that can handle any parameterized distance or loss function.

(The usual classes of parameterized distances are the Bregman, Csiszár, and Burbea-Rao divergences.)

Examples: K-means, expectation maximization (EM), Voronoi diagrams, barycenters, smallest enclosing balls, ball trees, etc.

- Geometry of information.

Examples: Dually flat spaces of exponential/mixture families (the VC-dimension of balls remains d+1), Riemannian geometry, Finsler geometry (HARDI datasets), etc.

- Novel applications.

Examples: Statistics, machine learning, computational geometry
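As an illustration of the "generic algorithm" idea above, the following Python sketch runs a k-means loop in which the distance is an arbitrary Bregman divergence D_F(x : y) = F(x) - F(y) - <x - y, grad F(y)>, supplied through its generator F and gradient. It relies on the known property that the minimizer of the average divergence D_F(x_i : c) over the center c is the arithmetic mean, whatever the generator. All function names and the toy data are hypothetical, and the sketch is not taken from any software listed on this page.

```python
import math

def bregman_divergence(F, grad_F, x, y):
    """D_F(x : y) = F(x) - F(y) - <x - y, grad F(y)>."""
    g = grad_F(y)
    return F(x) - F(y) - sum((xi - yi) * gi for xi, yi, gi in zip(x, y, g))

# Generator of the squared Euclidean distance.
def sq_norm(x):
    return sum(xi * xi for xi in x)

def grad_sq_norm(x):
    return [2.0 * xi for xi in x]

# Negative Shannon entropy, generator of the (generalized) Kullback-Leibler divergence.
def neg_entropy(x):
    return sum(xi * math.log(xi) for xi in x)

def grad_neg_entropy(x):
    return [math.log(xi) + 1.0 for xi in x]

def bregman_kmeans(points, centers, F, grad_F, iterations=20):
    """Lloyd-style iterations with a Bregman divergence as the distortion."""
    centers = [list(c) for c in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        # Assignment step: nearest center with respect to D_F(x : center).
        for x in points:
            j = min(range(len(centers)),
                    key=lambda k: bregman_divergence(F, grad_F, x, centers[k]))
            clusters[j].append(x)
        # Update step: the arithmetic mean minimizes the average D_F(x : c).
        for j, members in enumerate(clusters):
            if members:
                dim = len(members[0])
                centers[j] = [sum(m[d] for m in members) / len(members)
                              for d in range(dim)]
    return centers

# Toy data: four positive histograms forming two obvious groups.
points = [[0.1, 0.9], [0.2, 0.8], [0.8, 0.2], [0.9, 0.1]]
kl_centers = bregman_kmeans(points, [points[0], points[3]],
                            neg_entropy, grad_neg_entropy)
euclid_centers = bregman_kmeans(points, [points[0], points[3]],
                                sq_norm, grad_sq_norm)
```

Only the assignment step depends on the chosen generator, so the same loop serves the squared Euclidean distance, the Kullback-Leibler divergence, and any other Bregman divergence whose generator and gradient are provided.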

Let us give some examples of information manifolds:

- Statistical manifolds (parametric distributions),

- Neural manifolds (Boltzmann machines with fixed topology, i.e., number of nodes),

- ARMA(p,q) time-series manifolds (e-flat=-1-flat)

- Multiterminal problems met in information theory,

- Linear programming problems (e.g., continuous Karmarkar inner method walking along the m-geodesic),

- Clustering (negative entropy and dual Legendre log-normalizer conjugate for soft/hard clustering).
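The pairing of a log-normalizer with its Legendre conjugate, mentioned in the clustering item above, can be checked numerically on the simplest case. The sketch below assumes the Bernoulli family, whose log-normalizer is F(theta) = log(1 + exp(theta)) and whose conjugate F*(eta) is the negative entropy eta log eta + (1 - eta) log(1 - eta). It verifies the Fenchel-Young equality F(theta) + F*(eta) = theta * eta and the duality D_F(theta1 : theta2) = D_F*(eta2 : eta1). The names and parameter values are illustrative only.

```python
import math

def F(theta):
    # Log-normalizer of the Bernoulli family in natural coordinates.
    return math.log1p(math.exp(theta))

def grad_F(theta):
    # Expectation parameter eta = sigmoid(theta).
    return 1.0 / (1.0 + math.exp(-theta))

def F_star(eta):
    # Legendre conjugate of F: the negative Shannon entropy of Bernoulli(eta).
    return eta * math.log(eta) + (1.0 - eta) * math.log(1.0 - eta)

def grad_F_star(eta):
    # Inverse of grad_F: the logit function.
    return math.log(eta / (1.0 - eta))

def bregman_1d(G, grad_G, a, b):
    """One-dimensional Bregman divergence D_G(a : b)."""
    return G(a) - G(b) - (a - b) * grad_G(b)

# Two hypothetical natural parameters and their dual (expectation) coordinates.
theta1, theta2 = 0.3, -1.2
eta1, eta2 = grad_F(theta1), grad_F(theta2)

primal = bregman_1d(F, grad_F, theta1, theta2)        # D_F(theta1 : theta2)
dual = bregman_1d(F_star, grad_F_star, eta2, eta1)    # D_F*(eta2 : eta1)
fenchel_gap = F(theta1) + F_star(eta1) - theta1 * eta1
```

Both divergences coincide with the Kullback-Leibler divergence between Bernoulli(eta2) and Bernoulli(eta1), so a computation can be carried out in whichever coordinate system is more convenient.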

Publications

Journals

- Hierarchical Gaussian Mixture Model (2010) International Conference on Acoustics, Speech and Signal Processing (ICASSP) [bib] [pdf][slides]

- Hyperbolic Voronoi diagrams made easy (2010) International Conference on Computational Sciences and Its Applications (ICCSA) [bib] [pdf]

- Boosting $k$-NN for categorization of natural scenes (2010) Computing Research Repository (CoRR) [bib] [pdf]

- Statistical exponential families: A digest with flash cards (2009) Computing Research Repository (CoRR) [bib] [pdf]

- Steering self-learning distance algorithms (2009) Communications of the ACM [bib] [pdf]

- Bregman divergences and surrogates for learning (2009) IEEE Transactions on Pattern Analysis and Machine Intelligence [bib] [pdf]

- Approximating smallest enclosing balls with applications to machine learning (2009) International Journal of Computational Geometry and Applications [bib] [pdf]

- Levels of details for Gaussian mixture models (2009) Ninth Asian Conference on Computer Vision (ACCV) [bib] [pdf][poster]

- Opinion on "Open, Closed, or Clopen Access" (2009) [bib]

- Gaussian mixture models via entropic quantization (2009) 2009 European Signal Processing Conference (EUSIPCO) [bib] [pdf]

- Sided and Symmetrized Bregman Centroids (2009) IEEE Transactions on Information Theory [bib] [pdf]

- Accuracy of distance metric learning algorithms (2009) Workshop on Data Mining using Matrices and Tensors (DMMT) [bib] [pdf][slides]

- The dual Voronoi diagrams with respect to representational Bregman divergences (2009) International Symposium on Voronoi Diagrams (ISVD) [bib] [pdf][slides]

- Bregman vantage point trees for efficient nearest neighbor queries (2009) IEEE International Conference on Multimedia and Expo (ICME) [bib] [pdf]

- Searching high-dimensional neighbours: CPU-based tailored data-structures versus GPU-based brute-force method (2009) Computer Vision / Computer Graphics Collaboration Techniques and Applications (MIRAGE) [bib] [pdf][slides]

- Hyperbolic Voronoi diagrams made easy (2009) Computing Research Repository (CoRR) [bib] [pdf]

- Tailored Bregman ball trees for effective nearest neighbors (2009) European Workshop on Computational Geometry (EuroCG) [bib] [pdf][slides]

- A volume shader for quantum Voronoi diagrams inside the 3D Bloch ball (2009) ShaderX7: Advanced Rendering Techniques [bib]

- Information geometries and microeconomic theories (2009) Computing Research Repository (CoRR) [bib] [pdf]

- Emerging trends in visual computing (2009) [bib] [pdf]

- Computational information geometry: Pursuing the meaning of distances (2009) Open Systems Science [bib]

- Bregman sided and symmetrized centroids (2008) International Conference on Pattern Recognition (ICPR) [bib] [pdf]

- On the efficient minimization of classification calibrated surrogates (2008) Neural Information Processing Systems (NIPS) [bib] [pdf]

- Abstracts of the LIX fall colloquium 2008: Emerging trends in visual computing (2008) Emerging trends in visual computing (ETVC) [bib] [pdf]

- Intrinsic geometries in learning (2008) Emerging trends in visual computing (ETVC) [bib] [pdf]

- Soft Uncoupling of Markov Chains for Permeable Language Distinction: A New Algorithm (2008) Computing Research Repository (CoRR) [bib] [pdf]

- Quantum Voronoi diagrams and Holevo channel capacity for $1$-qubit quantum states (2008) IEEE International Symposium on Information Theory (ISIT) [bib]

- The entropic centers of multivariate normal distributions (2008) European Workshop on Computational Geometry (EuroCG) [bib] [pdf]

- Quantum Voronoi diagrams (2008) European Workshop on Computational Geometry (EuroCG) [bib] [pdf][slides]

- An interactive tour of Voronoi diagrams on the GPU (2008) ShaderX6 [bib]

- A Volume shader for quantum Voronoi diagrams inside the 3D Bloch ball (2008) ShaderX7 [bib]

- On the smallest enclosing information disk (2008) Information Processing Letters [bib] [pdf]

- Les (très) nombreuses épingles algorithmiques de la meule de surrogées (2008) Conférence francophone sur l'apprentissage automatique (CAp) [bib]

- On the centroids of symmetrized Bregman divergences (2007) Computing Research Repository (CoRR) [bib] [pdf]

- Bregman Voronoi diagrams: Properties, algorithms and applications (2007) CoRR [bib] [pdf]

- Visualizing Bregman Voronoi diagrams (2007) Symposium on Computational Geometry (SoCG) [bib] [pdf]

- A real generalization of discrete AdaBoost (2007) Artificial Intelligence [bib] [pdf]

- Real boosting a la carte with an application to boosting oblique decision trees (2007) International Joint Conference on Artificial Intelligence (IJCAI) [bib] [pdf]

- On Bregman Voronoi diagrams (2007) Symposium on Discrete Algorithms (SODA) [bib] [pdf][slides]

- On weighting clustering (2006) IEEE Transactions on Pattern Analysis and Machine Intelligence [bib] [pdf]

- On the smallest enclosing information disk (2006) Canadian Conference on Computational Geometry (CCCG) [bib] [pdf]

- A real generalization of discrete AdaBoost (2006) European Conference on Artificial Intelligence (ECAI) [bib]

- Soft uncoupling of Markov chains for permeable language distinction: A new algorithm (2006) European Conference on Artificial Intelligence (ECAI) [bib]

- On approximating the smallest enclosing Bregman balls (2006) Symposium on Computational Geometry (SoCG) [bib] [slides]

- Fitting the smallest enclosing Bregman ball (2005) European Conference on Machine Learning (ECML) [bib] [pdf][slides]

Conferences

- LIX Colloquium ETVC'08 (LNCS 5416, lecture videos online)

- Information Geometry and its Applications

- MaxEnt 2010

- 1st International Workshop on Advanced Methodologies for Bayesian Networks (AMBN 2010)

- ISVD

- James L. Massey paper archive

- http://gcoe.mims.meiji.ac.jp/

- Feature in computer vision http://svr-www.eng.cam.ac.uk/~er258/work/fast.html

- Information Theory and Applications Center http://ita.ucsd.edu/members.php

- http://www.cis.upenn.edu/~cis610/ (Jean Gallier)

- http://www.mcfund.or.jp/

- http://www.ajinomoto.com/about/rd/index.html

- http://www.nec.co.jp/rd/en/ccil/ Keiji Yamada

- http://www2.warwick.ac.uk/fac/sci/statistics/crism/workshops/

- SIAM. J. Matrix Anal. & Appl. (SIMAX) http://www.siam.org/journals/simax/authors.php

- Journal of Elasticity, Springer http://www.springer.com/physics/classical+continuum+physics/journal/10659

- Rajendra Bhatia http://www.isical.ac.in/~kushari/faculty.html

- OJALGO http://ojalgo.org/generated/org/ojalgo/optimisation/quadratic/QuadraticSolver.html

- http://www.dm.unipi.it/~ilas2010/index.php?page=plenary#min07

- http://developer.download.nvidia.com/compute/cuda/3_0/sdk/website/OpenCL/website/Computer_Vision.html

- International Journal of Computational Intelligence Research (1)

- International Journal of Computer Mathematics (1)

- Google books

- Computational Geometry

- Java Processing Language and processing.js (for web graphics)

- Processing PDF library

- Image and Vision Computing (Springer journal)

- The Gauge Connection (Blog of Marco Frasca)

- IRS'11 (International Radar Conference)

- http://www.cs.tut.fi/~timhome/tim.htm

- http://through-the-interface.typepad.com/

- IEEE Trans. on Signal Processing (Correspondence) http://www.signalprocessingsociety.org/publications/periodicals/tsp/

- naist.ac.jp

- http://www.cs.waseda.ac.jp/eng/faculty/index.html

- http://spiderman-2.laas.fr/JRL-France/presentation.htm

- http://cglearning.eu/

- http://press.princeton.edu/titles/8860.html

- Japan prize

- http://press.princeton.edu/

- http://www.e-rad.go.jp/

- IEEE Japan

- IEEE Signal Processing

- Information Science at TITECH

- HVRL Keio, Prof. Hideo Saito (Yagami campus), HVRL.

- Computational and Conformal Geometry (2007)

- Computational Conformal Geometry (book)

- Geometric Algebra Computing

Software

- jMEF: A Java library for mixtures of exponential families (with a Matlab wrapper)

- Institute of Statistical Mathematics (ISM, Tachikawa, Tokyo, Japan)

- Research Organization of Information and Systems (Kamiyacho, Tokyo, Japan)

- National Institute of Polar Research

- National Institute of Informatics

- Institute of Statistical Mathematics

- National Institute of Genetics

- Transdisciplinary Research Integration Center

- Database Center for Life Science

- C.R.Rao's Advanced Institute of Mathematics, Statistics and Computer Science (AIMSCS)

- Beijing Institute of Technology (BIT)

- The Japan Academy

- Hironaka Heisuke (Fields medal, 1970)

- Kashiwara Masaki (French academy of sciences)

- Mori Shigefumi (Fields medal, 1990)

- TRECVID video datasets

- CAVIAR people video datasets

- ModelDB (for neuroscience)

- DML-JP, Japanese Digital Mathematics Library

Online December 2007. Last updated, January 2011.

© 2007-2011 Frank NIELSEN, All rights reserved.