- 10h-12h: Michel Broniatowski (UPMC), Echantillonnage pondéré, maximum de vraisemblance et divergences. (abstract)

- 12h-14h: Break.

- 14h-16h: Michaël Aupetit (CEA), Un modèle génératif pour l'apprentissage automatique de la topologie d'un nuage de points. (abstract)

- GRETSI 2011 (September 5-8, 2011, SS2 - Science géométrique de l'information)

- SMAI 2011 (May 23-27, 2011, mini-symposium)

- 10h-12h: Professor Alfred Hero, Extracting correlations from random matrices: phase transitions and Poisson limits (abstract)

- 12h-14h: Break.

- 14h-16h: Professor Christophe Vignat, Caractérisations géométriques des lois à entropie généralisée maximale (abstract)

Program

- 10h-12h: Professor Asuka Takatsu, Wasserstein geometry of the space of Gaussian measures (abstract, paper: WASSERSTEIN GEOMETRY OF GAUSSIAN MEASURES)

- 12h-14h: Lunch break

- 14h-16h: Professor Wilfrid Kendall, Riemannian barycentres: from harmonic maps and statistical shape to the classical central limit theorem (abstract)

- Xavier Pennec : Current Issues in Statistical Analysis on Manifolds for Computational Anatomy (abstract)

- Frank Nielsen : The Burbea-Rao and Bhattacharyya centroids (slides)

- 10h - 12h: Xavier Pennec (part I), Current Issues in Statistical Analysis on Manifolds for Computational Anatomy

- 12h - 14h: Lunch

- 14h - 16h: Xavier Pennec (part II), Current Issues in Statistical Analysis on Manifolds for Computational Anatomy

- 16h - 16h30: Coffee break

- 16h30 - 17h30: Frank Nielsen, The Burbea-Rao and Bhattacharyya centroids (video)

- No. 1: Friday, October 22, 2010, starting at 10h.

Program:

- 10h - 12h: Jean-François Bercher, Some topics on Rényi-Tsallis entropy, escort distributions and Fisher information

- 12h - 14h: lunch break

- 14h - 16h: Jean-François Cardoso, Géométrie de l'Analyse en Composantes Indépendantes

Abstracts:

10h-12h: Some topics on Rényi-Tsallis entropy, escort distributions and Fisher information

Jean-François Bercher

http://www.esiee.fr/~bercherj

We begin by recalling a source coding theorem by Campbell, which relates a generalized measure of length to the Rényi-Tsallis entropy. We show that the associated optimal codes can easily be obtained using considerations on the so-called escort distributions, and that this provides an easy implementation procedure. We also show that these generalized lengths are bounded below by the Rényi entropy.
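As an illustration of the coding result recalled above, here is a minimal sketch, not taken from the talk. It assumes Campbell's exponential length with parameter t > 0, the order alpha = 1/(1+t), and a small hypothetical source distribution `p`; all function names are illustrative. It checks numerically that code lengths read off the escort distribution reach the Rényi entropy, and that rounding them up to integers keeps the generalized length within one unit of that bound.

```python
import math

def escort(p, alpha):
    """Escort distribution of order alpha: P_i = p_i^alpha / sum_j p_j^alpha."""
    w = [pi ** alpha for pi in p]
    z = sum(w)
    return [wi / z for wi in w]

def renyi_entropy(p, alpha, base=2.0):
    """Rényi entropy of order alpha (alpha != 1), in units of log `base`."""
    return math.log(sum(pi ** alpha for pi in p), base) / (1.0 - alpha)

def campbell_length(p, lengths, t, base=2.0):
    """Campbell's generalized length (1/t) * log_D sum_i p_i * D^(t * l_i)."""
    s = sum(pi * base ** (t * li) for pi, li in zip(p, lengths))
    return math.log(s, base) / t

# Hypothetical source distribution and length parameter (assumptions).
p = [0.5, 0.25, 0.125, 0.125]
t = 1.0
alpha = 1.0 / (1.0 + t)

# Ideal (real-valued) lengths are the Shannon lengths of the escort distribution.
ideal = [-math.log(Pi, 2.0) for Pi in escort(p, alpha)]
# Rounding up gives integer lengths that still satisfy the Kraft inequality.
integer = [math.ceil(l) for l in ideal]

H = renyi_entropy(p, alpha)
L_ideal = campbell_length(p, ideal, t)
L_int = campbell_length(p, integer, t)
kraft = sum(2.0 ** (-l) for l in integer)

print(f"Renyi entropy H_alpha    = {H:.4f}")
print(f"length, ideal lengths    = {L_ideal:.4f}")
print(f"length, integer lengths  = {L_int:.4f}")
print(f"Kraft sum                = {kraft:.4f}")
```

With real-valued lengths the generalized length coincides with the Rényi entropy of order alpha; with integer lengths it lies between that entropy and the entropy plus one.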

We then discuss the maximum entropy problems associated with Rényi Q-entropy, subject to two kinds of constraints on expected values. The constraints considered are a constraint on the standard expectation, and a constraint on the generalized expectation as encountered in nonextensive statistics. The optimum maximum entropy probability distributions, which can exhibit a power-law behaviour, are derived and characterized. The Rényi entropy of the optimum distributions can be viewed as a function of the constraint. This defines two families of entropy functionals in the space of possible expected values. General properties of these functionals, including nonnegativity, minimum, convexity, are documented. Their relationships as well as numerical aspects are also discussed. We also examine some specific cases for the reference measure Q(x) and recover in a limit case some well-known entropies.

Finally, we describe a simple probabilistic model of a transition between two states, which leads to a curve, parametrized by an entropic index q, in the form of a generalized escort distribution. In this setting, we show that the Rényi-Tsallis entropy emerges naturally as a characterization of the transition. Along this escort-path, the Fisher information, computed with respect to the escort distribution, becomes an “escort-Fisher information”. We show that this Fisher information involves a generalized score function, and appears in the entropy differential metric associated with Rényi entropy. We also show that the length of the escort-path is related to Jeffreys' divergence. When q varies, we show that the paths with minimum Fisher information (respectively escort-Fisher information) and a given q-variance (resp. standard variance) are the paths described by q-Gaussian distributions. From these results, we obtain a generalized Cramér-Rao inequality. If time permits, we will show that these results can be extended to higher order q-moments.
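The link between the escort-path and Jeffreys' divergence can be explored numerically. The sketch below is an illustration under stated assumptions, not the construction of the talk. It assumes the path is the normalized geometric mixture p0^(1-q) * p1^q between two hypothetical discrete distributions `p0` and `p1`. It checks one such relation: the Fisher information with respect to q, integrated over q in [0, 1], equals the Jeffreys divergence between the endpoints. All names are illustrative.

```python
import math

def escort_path(p0, p1, q):
    """Assumed path: normalized geometric mixture p0^(1-q) * p1^q."""
    w = [a ** (1.0 - q) * b ** q for a, b in zip(p0, p1)]
    z = sum(w)
    return [wi / z for wi in w]

def fisher_information(p0, p1, q):
    """Fisher information w.r.t. q: variance of log(p1/p0) under the path."""
    pq = escort_path(p0, p1, q)
    stat = [math.log(b / a) for a, b in zip(p0, p1)]
    mean = sum(w * s for w, s in zip(pq, stat))
    return sum(w * (s - mean) ** 2 for w, s in zip(pq, stat))

def kl(p, r):
    """Kullback-Leibler divergence between discrete distributions."""
    return sum(a * math.log(a / b) for a, b in zip(p, r))

def simpson(f, a, b, n=200):
    """Composite Simpson rule on [a, b] with an even number n of intervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0

# Hypothetical endpoint distributions (assumptions).
p0 = [0.7, 0.2, 0.1]
p1 = [0.1, 0.3, 0.6]

jeffreys = kl(p0, p1) + kl(p1, p0)
integrated_fisher = simpson(lambda q: fisher_information(p0, p1, q), 0.0, 1.0)

print(f"Jeffreys divergence       = {jeffreys:.6f}")
print(f"integral of Fisher info   = {integrated_fisher:.6f}")
```

The identity holds because, along this path, the derivative with respect to q of the mean of log(p1/p0) is its variance, so integrating the Fisher information from 0 to 1 gives the difference of the two endpoint means, which is the sum of the two Kullback-Leibler divergences.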

14h-16h: Géométrie de l'Analyse en Composantes Indépendantes

Jean-François Cardoso

http://perso.telecom-paristech.fr/~cardoso/

Independent Component Analysis (ICA) consists in finding the linear transformation of a set of N signals (or N images, or any N-variate data) that yields components that are "as independent as possible". A natural measure of independence is mutual information (extended to N variables). The study of this problem, which has many applications, brings out, in addition to mutual information, other quantities defined in the language of information theory: entropy and non-Gaussianity in the case of i.i.d. models, spectral diversity in the case of stationary Gaussian models, etc.
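For reference, the mutual-information criterion mentioned here can be written out as follows. This is a standard formulation and not necessarily the notation of the talk: the symbols X (observed N-vector), B (invertible N x N matrix), Y = BX and H (differential entropy) are assumptions introduced for illustration.

```latex
% Mutual information of the components of Y = BX (B invertible, N x N)
I(Y) = \sum_{i=1}^{N} H(Y_i) - H(Y),
\qquad
H(Y) = H(X) + \log\lvert\det B\rvert,
% hence
I(Y) = \sum_{i=1}^{N} H(Y_i) - \log\lvert\det B\rvert - H(X).
```

Since H(X) does not depend on B, minimizing I(Y) over B amounts to minimizing the sum of the marginal entropies minus log|det B|.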

Following a suggestion by Amari, I uncovered the geometric structure of ICA. My talk will take as its starting point the study of the likelihood of statistical ICA models, which will lead us very directly to the elucidation of the ICA criteria and of their interrelations in terms of information geometry.

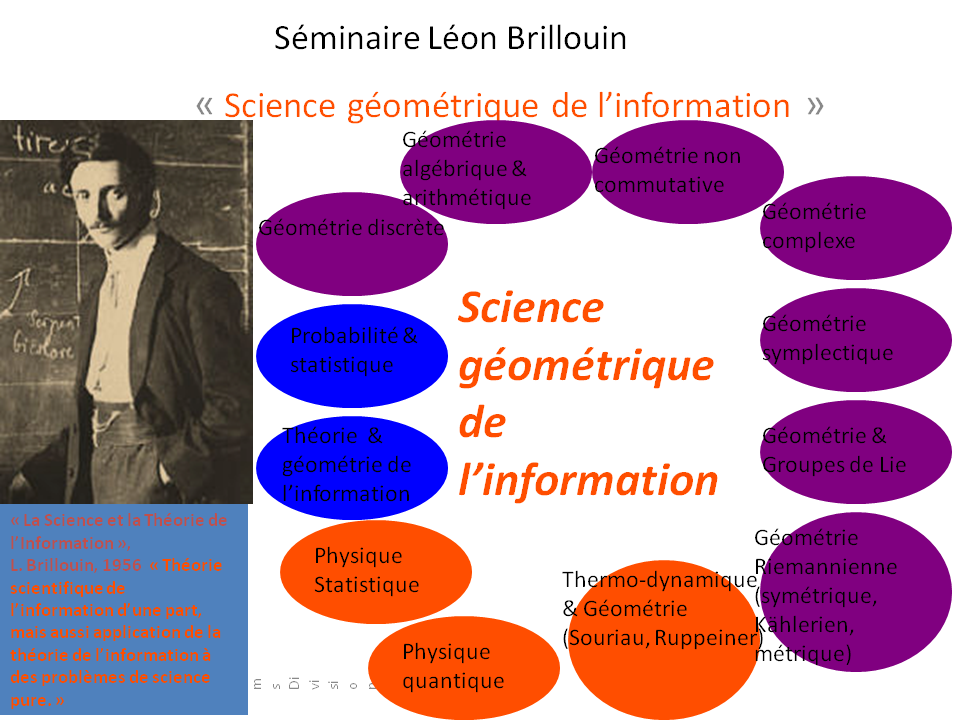

Profile of Léon Brillouin

Léon Nicolas Brillouin (1889-1969) published the French translation of his English-language book "Science and Information Theory" (1956) in 1958: "La science et la théorie de l'information" (translated by M. Parodi) (google book)

- Preface (to the French edition) followed by the introduction chapter

- BIBNUM

- Analysis of Léon Brillouin's contributions

- The biography by the National Academy of Sciences (USA).

- Frédéric Barbaresco (Thales)

- Arshia Cont (IRCAM)

- Frank Nielsen (École Polytechnique/LIX, Sony CSL)

- IRCAM (Arshia Cont, Gérard Assayag, Arnaud Dessein)

- École Polytechnique (Frank Nielsen, Sylvain Boltz, Olivier Schwander)

- Mines ParisTech (Pierre Rouchon, Silvère Bonnabel, Jesus Angulo)

- Telecom ParisTech (Hugues Randriam)

- SUPELEC (Mérouane Debbah, Romain Couillet)

- UTT Troyes (Hichem Snoussi)

- Université de Poitiers (Marc Arnaudon, Le Yang)

- Université de Montpellier (Michel Boyom, Paul Bryand)

- Observatoire de Nice (Cédric Richard)

- INRIA (Jean-Paul Zolesio, Rama Cont, Xavier Pennec)

- Thales (Frédéric Barbaresco, Jean-François Marcotorchino, François Gosselin)

- ...

You can also join the international mailing list.

Past events:

Some historical articles on the Fréchet-Darmois bound (also called the Cramér-Rao bound) and other major contributions by Maurice Fréchet:

- Comparaison des diverses mesures de la dispersion, Maurice Fréchet, Statistical Institute, Vol. 8, No. 1/2 (1940), pp. 1-12

- Sur l'extension de certaines évaluations statistiques au cas de petits échantillons, Maurice Fréchet, Statistical Institute, Vol. 11, No. 3/4 (1943), pp. 182-205

- Sur les limites de la dispersion de certaines estimations, Georges Darmois, Statistical Institute, Vol. 13, No. 1/4 (1945), pp. 9-15

- Sur la distance de deux lois de probabilité, Maurice Fréchet, Académie des Sciences, 4 February 1957.

- Les éléments aléatoires de nature quelconque dans un espace distancié, Maurice Fréchet, Annales de l'institut Henri Poincaré, 10, no. 4, (1948), p. 215-310.

Events

- Anomalous Statistics, Generalized Entropies, and Information Geometry, March 2012, Nara, Japan.

- Mathematics of Distances and Applications, July 2012, Varna, Bulgaria.

© 2010, 2012 Frank Nielsen. All rights reserved.